FOX Sports on the Converging Worlds of Cinema and Live Sport Production

February 18, 2022

Last summer, MLB and FOX Sports’ Emmy Award-winning broadcast team channeled the magic of Universal Pictures’ classic film “Field of Dreams” to showcase a special matchup between the New York Yankees and Chicago White Sox. The game took place on a custom field built only 1,000 feet from the original film set in Dyersville, IA, separated from it by a cornfield, and marked an incredible feat for the sport. The live broadcast combined film and live production techniques to achieve a more cinematic look that dazzled fans. Brad Cheney, VP of Field Operations and Engineering at FOX Sports, took the time to chat with us about the project and what it could signal for the future of sports production. Continue reading for interview highlights:

Describe your background, role at FOX Sports, and what you enjoy about your job.

I’m one of the lucky few who knew what I wanted to do from a young age. In high school, I took TV classes, then honed my skills at the University of Hartford in Connecticut. While in school, I landed a job as a technician at a local FOX affiliate, which gave me the chance to learn all aspects of live production. Following that, I worked at Cox Sports, Game Creek Video, The Late Show with David Letterman, and MLB Network before ending up at FOX Sports eight years ago. I’m currently responsible for the operations and engineering behind MLB, USFL and college sports, and I love that we get to collaborate with the entire production team to figure out how to technically execute the vision. I enjoy the synergy of working with such a smart, creative team, developing solutions from scratch, and watching the result unfold for audiences at home. The fact that I also get to work on some of my favorite sports doesn’t hurt either; it’s a perfect marriage of my skills and hobbies.

What was it like working on the “Field of Dreams” production?

“Field of Dreams” is an incredible movie with memorable characters. Its story transcends baseball and is near and dear to anyone who grew up playing sports, so working on this project was amazing. At the same time, it was challenging because the team had to build an MLB-grade field from the ground up in the middle of Iowa, near the original film set. It took more than a year of planning and six months to build the field, the surrounding village, and the production infrastructure.

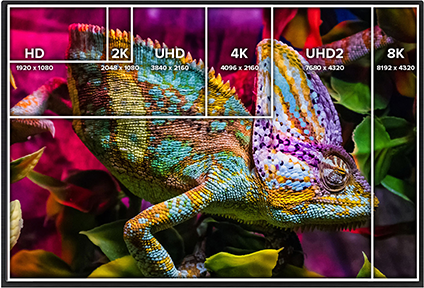

Between taped, pre-produced elements and live footage, we had to think carefully about how we could blend cinematic and live broadcast looks. We wanted to emulate the capture mechanism of cinema, so we standardized on 1080p HDR, using HLG with the BT.2020 wide color gamut. This allowed us to maintain a consistent aesthetic as we transitioned from a pre-produced opener featuring Kevin Costner into the live game broadcast, which was shot with high frame rate cameras and other standard broadcast equipment, without compromising either look.

What techniques did you use to achieve such a filmic look?

Faking a shallow depth-of-field look with broadcast cameras is possible, but it is best achieved with specialized film equipment, such as cinema cameras with large sensors and prime lenses, so we had several on hand. We then played with the light levels and adjusted the apertures. To recreate the color, warmth, and feel of “Field of Dreams,” we leaned on our camera technology and AJA gear. The AJA FS-HDR allowed us to create that curve, strike the right balance between the two looks, and move between them as we transitioned between video sources. We also used it to control blacks, whites, gammas, and matrix settings on some devices. Having this color correction capability built into our converter was paramount, considering we also used some 1080p SDR and 4K/UHD SDR camera sets. FS-HDR helped us match the looks across the board.

How did you concept and test the look prior to production?

Preparations began in early June, ahead of the All-Star Game, as a collaboration between our postseason baseball group, video team, replay group, lead technical director, and tech group, as well as the technology vendors we work with. We used the All-Star Game as a workflow testbed to ensure we’d have an accurate picture of what we were walking into. Even though we knew we wanted to go with 1080p HDR, this gave us time to explore other options. The next test phase came once we arrived in Iowa. There, we had to figure out how to blend the look we’d established for the All-Star Game with the look and feel of the original set and film. We presented options to production and, using their input, made equipment modifications and refined the workflow until we hit the mark and brought a cinema look to live broadcast. It was one of those beautiful television moments.

What kinds of modifications did the team make to deliver the final live look?

We played with different equipment settings and weighed our options. There were times when 24p looked great, but others when it was too jumpy for live game action, so we opted for 29.97p for many of our specialty cameras. Then we had to account for subtle differences in the look from one piece of equipment to the next. Ultimately, this approach ensured we had the right conversion and settings in all the right places, and it gave us an appreciation for recent technological advances. You can now have an RF transmitter that can do 24, 25, 29.97, 50, 59.94 and 60, which is amazing. The same can be said for conversion gear like the FS-HDR, which lets us determine what the picture will look like, because we can quickly move between looks, line them up, and compare them to get a clear picture of what we’re aiming for. In the end, this was key, because the timeline was tight. The research we did leading up to the production gave us a concept of where we wanted to be, and once we saw the actual lighting conditions, we knew what adjustments to make to get to our look.

Tell us more about the technology deployed to achieve the desired outcome.

We leveraged an extensive range of cameras for capture, largely tried-and-true broadcast cameras, but we also used 12 cinema cameras. The lineup included multiple Sony Venices with PL-mount lenses; Sony F55, 4300, 5500, and P50 cameras; and RED cameras, including the KOMODO. All the cinema cameras ran through an AJA FS-HDR frame synchronizer/converter, in some cases with balancing. We also had 1080p-capable POV cameras buried around the infield. In total, we used 60 FS-HDRs with our cameras across the production, a combination that allowed us to create the desired look with full-color imagery, balance, and color correction. We produced the feed in 1080p HDR, but some of our international distributors prefer to deliver content in other formats. That meant there was a lot of conversion going on in the field, and with FS-HDR, we could easily convert SDR to HDR for production and HDR to SDR for domestic and global distribution. This proved key, because starting with the highest possible image quality yields the best final product, and the AJA FS-HDRs helped make that possible.

How’d you weave the warmth of the film aesthetic into the looks you’re creating?

The field lighting was designed for optimal baseball play, not for shooting a film, so we planned to apply predefined looks to the live content that matched the original “Field of Dreams” aesthetic. Ultimately, we pulled back on the color correction because the natural sky gave us what we needed. However, taking advantage of this natural light introduced additional challenges. We had to figure out exactly when the sun was going to set and bridge the look as evening transitioned into night. Having color correction integrated into the workflow sped up that transition and allowed us to keep more adjustments active in real time.

Were any presets established ahead of time for the transition from evening to night?

We held rehearsals to get an idea of where we’d start and end. Each day was a little different as we approached the final point, and it wasn’t so much about hitting a preset as it was about resetting for the day, knowing you’re starting at point A and going to point B. We quickly discovered that we needed to be closer to B earlier than anticipated, and that’s where our master colorists were key. They could build a color palette that ensured everything matched across the board, while also making sure we achieved the look we wanted from the start and could ride it through.

How big was the team on the project?

Our team comprised more than 200 people across a range of disciplines, from ENG camera operators to drone professionals. For many of us, the project was different in that we had to undo a lot of things we normally do with the ENG crews, drones, and specialty film cameras to make sure we weren’t “TVing” them too much.

How do you think this production will influence future sports coverage at FOX and industry-wide?

This project has given us a great game plan going forward for cinematic integration at a large scale. When live action and replays can be shown with the same beautiful look you’d expect from a post-produced highlight show, without it feeling jarring, you can make the game feel larger than life for fans. We now know how to execute something like this quickly and make subtle tweaks, so in the future we want to bring this amazing depth of field and color to audiences in live broadcasts where it makes sense. From an industry standpoint, we’re on the cusp of being able to do everything we want to in sports in high frame rate and with a cinematic look, things that just weren’t achievable live five or ten years ago. We’ve now reached a point where this technique can be applied to other productions on a full-time basis rather than just as an added feature for select matchups.

Is there anything else you’d like to mention?

Everything we do at FOX is ultimately about the audience, and one thing we’ve always appreciated about our relationship with AJA is the ability to communicate openly and freely about what needs to happen in real time to get where we need to be for the broadcast. Conversations about combining color correction with format conversion and frame synchronization are vital to pushing the entire industry forward. It’s important that we find the right people, train them, and give them the tools to achieve their goals, but we also need to listen to those people in the field and hear their challenges so we can figure out how to do things better. We’re incredibly appreciative of everyone in the community pushing technology development to the next level as we continue striving to deliver the best experience possible.

About AJA FS-HDR

FS-HDR is a versatile 1RU, rack-mount universal converter/frame synchronizer for real-time HDR transforms as well as 4K/HD up/down/cross conversions. Fusing AJA’s production-proven FS frame synchronization and conversion technology with video and color space processing algorithms from the award-winning Colorfront Engine, FS-HDR matches the real-time, low-latency processing and color fidelity demands that broadcast, OTT, post production and ProAV environments require. www.aja.com/products/fs-hdr.

About AJA Video Systems

Since 1993, AJA Video Systems has been a leading manufacturer of video interface technologies, converters, digital video recording solutions and professional cameras, bringing high-quality, cost-effective products to the professional broadcast, video and post production markets. AJA products are designed and manufactured at our facilities in Grass Valley, California, and sold through an extensive sales channel of resellers and systems integrators around the world. For further information, please see our website at www.aja.com.

All trademarks and copyrights referenced herein belong to their respective companies.
