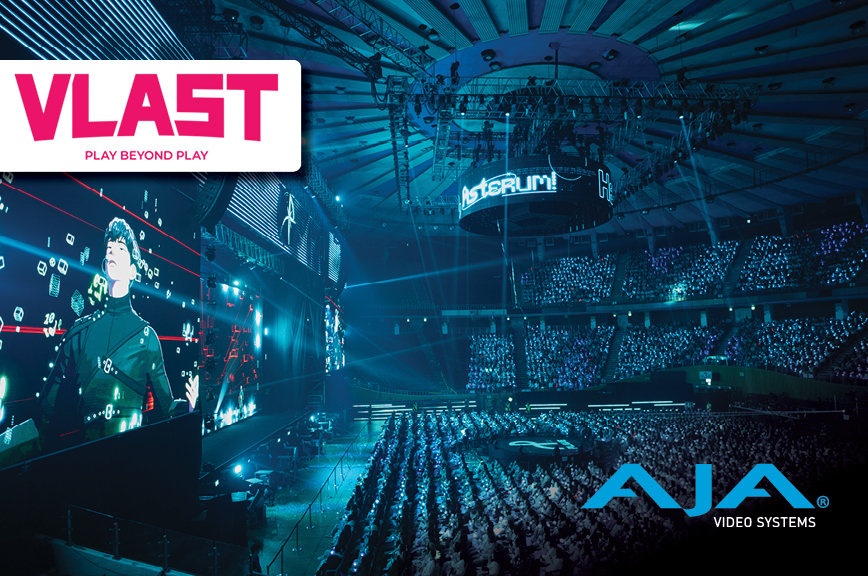

Harnessing the Power of Real-Time Virtual Production

February 9, 2022

Virtual production is transforming the way films, episodic series, and live broadcasts are captured and created. Working at the cutting edge of volumetric capture powered by real-time game engines, Dimension Studio bridges creativity and technology to help clients produce world-class content through virtual production workflows. AJA recently spoke with Jim Geduldick, Dimension Studio’s SVP and Director of Virtual Production, about the current state of the industry, emerging trends, and how new technologies are poised to revolutionize production workflows.

How has virtual production evolved over the past few years?

Virtual production has been around in various forms for quite some time. These technologies are not new, and there are many great examples of using translights for interactive lighting, as well as rear and front projection, that predate my time in the film industry. Looking at projects like “Oblivion,” “First Man,” “Gravity,” and “The Mandalorian,” you can see the evolution of these technologies in different forms.

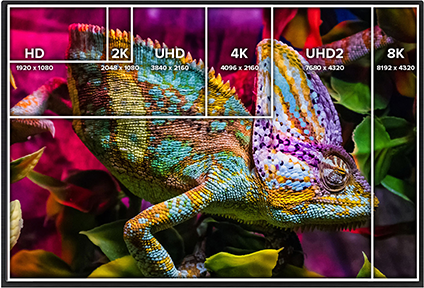

Within the virtual production ecosystem, you have real-time engine technology that people know from gaming, such as Unreal Engine, Unity, and CryEngine. What has evolved is the maturity of these tools and the way engines are being applied to real-time production workflows. Within the past five years, we’ve witnessed monumental advancements in game engine capabilities, as well as in hardware, with upgraded GPUs and enhanced video I/O.

For creatives and technologists, a robust toolset now exists that allows us to be more proactive with workflows, blending previs and the virtual art department. The speed at which we can iterate with real-time tools, going from pixels to hardware and up onto an LED wall or a traditional greenscreen, has increased rapidly. In the past 18 months alone, virtual production has generated a lot of excitement for the possibilities it presents and what its future holds.

How do you define your role as a virtual production supervisor?

My position blends the traditional roles of VFX supervisor and DP. I act as the bridge between departments to ensure that all teams are satisfied with how the digital and physical set elements come together in real time.

What are the major advantages of virtual production?

Being able to move some of the decision-making processes to the front end of production is one of virtual production’s biggest advantages. Does this always result in money saved? I think the budget buckets do get moved around a bit, but if a project is planned out, prevised, and shot correctly, that’s where you can save money. Real-time game engine technology offers a key advantage here, enabling teams to do the planning and storyboarding required for success. This is especially true in a pandemic-compliant environment, where crews are working in a bubble and need to simulate how set dynamics with personnel would actually work.

Explain how moving the decision-making processes to the front end of production impacts creativity.

Critics of virtual production may argue that it stifles the creative process because it lacks the visceral experience of shooting on location and capturing the perfect shot during magic hour. Playing devil’s advocate, I think it’s important to highlight the freedom that virtual production workflows afford. For continuity and budgetary reasons, shooting on an LED volume lets you capture the same time of day with the same color hue whenever you need it, allowing actors and crew members to focus more on the performance. You can also switch up your loads (the scenes on the volume) and jump around. For example, if you need to do a pickup, or if the screenwriter or director adds new lines, you can load the environment, move the set pieces back into place, and shoot the scene. No permits or challenging reshoots are required, freeing teams to focus on the creative aspects of production rather than the logistics.

How have actors and crew members responded to virtual production?

Actors are characteristically visual and emotional, and for years productions have required them to stare into green and blue volumes and still deliver powerful performances. Virtual production technologies like LED volumes and translights provide them with real background elements to play off and tell their story. This affects all key roles on set, including directors, DPs, VFX supervisors, and even stunt coordinators. If a director or DP can see what the virtual art department has created, they can make an informed decision before asking the line producer or studio for additional budget.

You’ve talked about the Brain Bar in previous interviews. Can you explain what this term means?

The Brain Bar is the team of technologists, engineers, and creatives who collaborate on set and are essentially responsible for putting the pixels up on the LED walls. This might include part of your virtual art department, your stage lead, LED engineers, or the staff running the real-time engines. The phrase comes from production on “The Mandalorian,” which coined a lot of new terms thanks to the show’s success and the sheer scale of its technological achievement.

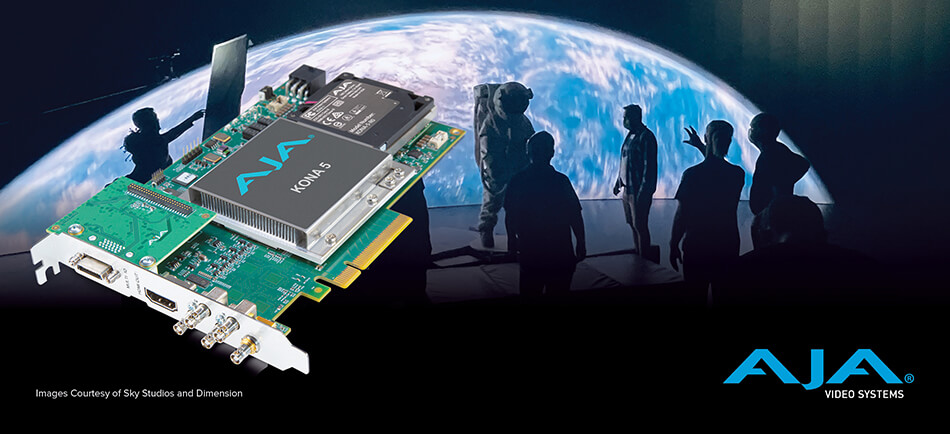

How does AJA gear fit into virtual production workflows?

The essence of real-time workflows is that we’re no longer waiting for rendering like we used to. Instead, we’re pushing as much as possible down the pipe through AJA hardware and other solutions to make everything quick and interactive up front. AJA’s KONA 5 video I/O cards are our workhorses; we use them to output high-bandwidth, ray-traced assets with ease. This ultimately allows us to make on-the-fly creative changes during production, saving studios and clients time and money because we’re capturing final effects in camera rather than waiting until post.

I’ve been an AJA customer for a long time because of the company’s reliability and support. If I’m trying to figure out how to set up a live key or signal flow paths, it’s critical to have good customer support that I can reach out to.

What is your approach to handling errors during production?

Let’s face it, accidents happen. Software can crash, hardware can stop working, a crew member can trip on a power cord, or there might be a power outage, so it’s important to have failsafes in place. Depending on the workflow we’re using, I might have the engine record certain graphics or metadata coming in from various sources. For example, if you have background and foreground elements combined via a simulcast solution, being able to record that composited image as a safety for editorial dailies and VFX is very helpful.

I’ve also found that being able to ingest video back into our systems through AJA I/O hardware, like KONA 5, leaves us the option to send a signal downstream if we need it. This safety net allows directors, VFX supervisors, and anyone else on set to request and view playback through our system. It’s not a requirement, but since it takes a village to create a TV show, indie film, documentary, or live broadcast, you never know when you may be called on at a critical moment.

How accessible is virtual production to newcomers?

The tools are becoming more democratized and accessible. Some components are still priced at a premium, but for someone coming from a traditional production background, it’s easy to get your hands on these tools. Game engines are free to download and experiment with, and you can buy or rent a RED camera, Canon C70, Alexa, or even a GoPro to get started. If you only have a laptop, you can easily connect over Thunderbolt to AJA I/O hardware to set up an affordable live-key mixed reality environment. It’s a really great time to be in virtual production, because things are moving fast and there are ample opportunities to experiment and innovate as creatives and technologists.

Where do you see the future of virtual production taking the M&E industry?

I see the next evolution of virtual production tools driving innovation in how narratives are told. The north star is the continual merging of imagination and possibility, and there are many resources available for learning how to leverage real-time technologies. There’s never been a richer time for self-education, so I encourage anyone interested in virtual production to dive in and invest some time and effort.

Listen to AJA’s full interview with Jim Geduldick on Sync with AJA Video Systems, and visit Dimension Studio’s website for more information.
